Asia Pacific Academy of Science Pte. Ltd. (APACSCI) specializes in international journal publishing. APACSCI adopts the open access publishing model and provides an important communication bridge for academic groups whose fields of interest include engineering, technology, medicine, computer science, mathematics, agriculture and forestry, and the environment.

Issue release: 30 June 2025

This research assesses how significant cybersecurity incidents affect the prices and trading volumes of Metaverse coins, focusing on the five largest assets from 2017 to 2024. The study uses event study and impulse response analysis to examine the impact of nine major security incidents, such as the Coincheck and Ronin hacks, on MANA (Decentraland), SAND (The Sandbox), AXS (Axie Infinity Shards), ENJ (Enjin Coin), and GALA (Gala Games). The dependent variables are the prices and volumes of the Metaverse coins, while the prices of Bitcoin and Ethereum serve as the main independent variables to control for price activity. The results show short-lived but strong effects that depend on event intensity and platform specifics. The Coincheck hack caused a 4.9% price drop and a 22% volume decline over 10 days, while the BtcTurk incident had a smaller impact. The paper extends research on the market stability of the cryptocurrency sector with new findings on risk control and investment in emerging asset classes.

Issue release: 30 June 2025

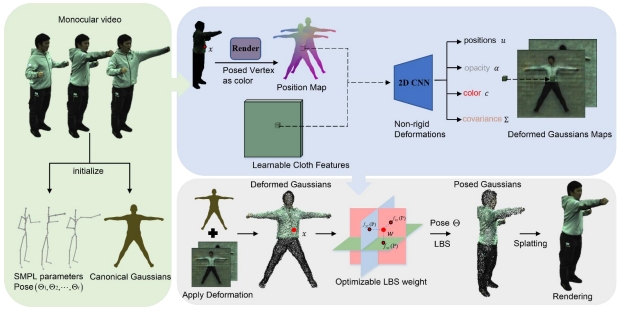

Reconstructing the human body from monocular video input presents significant challenges, including a limited field of view and difficulty in capturing non-rigid deformations, such as those associated with clothing and pose variations. These challenges often compromise motion editability and rendering quality. To address these issues, we propose a cloth-aware 3D Gaussian splatting approach that leverages the strengths of 2D convolutional neural networks (CNNs) and 3D Gaussian splatting for high-quality human body reconstruction from monocular video. Our method parameterizes 3D Gaussians anchored to a human template to generate posed position maps that capture pose-dependent non-rigid deformations. Additionally, we introduce Learnable Cloth Features, which are pixel-aligned with the posed position maps to address cloth-related deformations. By jointly modeling cloth and pose-dependent deformations, along with compact, optimizable linear blend skinning (LBS) weights, our approach significantly enhances the quality of monocular 3D human reconstructions. We also incorporate carefully designed regularization techniques for the Gaussians, improving the generalization capability of our model. Experimental results demonstrate that our method outperforms state-of-the-art techniques for animatable avatar reconstruction from monocular inputs, delivering superior performance in both reconstruction fidelity and rendering quality.

Issue release: 30 June 2025

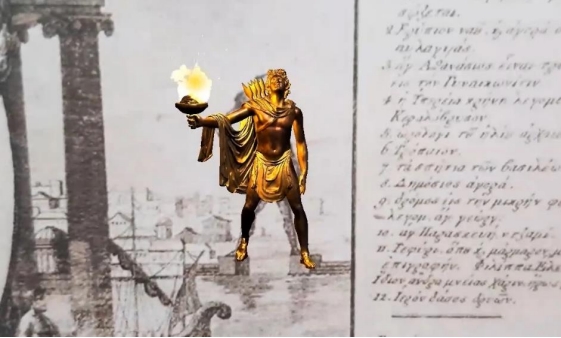

We can define the period we are living in as the last step of Society 5.0 (Super Intelligent Society), dominated by unmanned technologies, and the first step of Society 6.0, the Ecological Revolution Super Information Society. In the last step of Society 5.0, a new online lifestyle shaped by the Internet of Things, interfaces, big data, and robotics, the "Digital Big Bang: Metaverse!", is becoming part of our lives day by day. Today, humanity faces many problems that disrupt the ecological balance, such as climate change, environmental pollution, radioactive pollution, industrial waste, and unplanned urbanization; these negative actions lay the foundations of the Society 6.0 Ecological Revolution Super Information Society. Undoubtedly, one of the most important of these problems is the negative change in our understanding of ethics. Science has an active role in the development of digital technologies, but the social sciences must also play an active role in determining the cultural, sociological, and psychological effects of these technologies on individuals. With the Culture Metaverse to be established in the new digital life of the metaverse, it is possible to remind people of the ethical values that humanity has forgotten, to determine the ethical rules to be followed in the universes that humanity will enter with prototype avatars, in the colorful lives they will lead and in brand new experiences, and to create a digital ethical plane. The definition, content, and function of culture fall within the research area of the discipline of folklore, as do cultural drilling, the comparison of different cultures, analysis, and synthesis. In this respect, in this new digital structuring where the process of cultural ergonomics will be experienced, designing a metaverse with cultural content falls within the research areas of folklore and folklorists.
For this reason, Turkish folklorists will be greatly needed among the trained staff who will structure the Turkish Culture Metaverse. In this study, the concept of "Digital Big Bang: Metaverse!" is examined through a synthesis of science and social sciences, using the Folkloric Metaethical Theory, which I put forward as an interdisciplinary theory in the fields of folklore and metaethics, and its method of analysis, the Folkloric Grounding Metaethical Analysis Method. The positive and negative effects of the concept of the metaverse (beyond the universe) were investigated. Based on the universal ethical philosophy of the Book of Dede Korkut, a culture metaverse was designed, and the name “metaethicalverse”, which I gave to the ethical understanding of the culture metaverse, was illustrated by showing how the designed metaverse can be defined with the ethical code of Dede Korkut. Different concepts and designs of the Dede Korkut Culture Metaverse are presented. As the first study to examine the metaverse from a folkloric perspective, our study aims to address the disciplines of folklore and ethics at the metaethical level and adapt them to digital universes, to interpret Dede Korkut’s ethical philosophy from a universal perspective and reconstruct it in the metaverse environment, and to draw attention to the new goals to be achieved by folklorists, who will take on the role of quarterback of the digital age.

Issue release: 30 June 2025

In recent times, the concept of smart cities has gained traction as a means to enhance the utilization of urban resources, delivering sustainable services across sectors like energy, transportation, healthcare, and education. Applications for smart cities leverage information and communication technologies (ICT), particularly the Internet of Things (IoT), cloud computing, and fog computing. By merging these technologies with platforms like the metaverse, cities can improve service delivery and create more engaging experiences for residents. The metaverse, in this context, serves as a virtual space for interactions between citizens and city authorities, contributing positively to urban planning and management. Nonetheless, data security and privacy remain significant challenges for smart cities implementing these technologies. To address this, blockchain-based security solutions can be effective in supporting sustainable smart city applications. By integrating IoT, fog computing, and cloud services into a blockchain framework, it’s possible to establish a secure and advanced platform for the creation and deployment of applications aimed at sustainable urban development. This paper proposes a conceptual model that combines IoT, fog, and cloud technologies within a blockchain structure, enabling smart city applications to harness the strengths of each technology, thereby optimizing operations, improving service quality, and ensuring robust security.

Issue release: 30 June 2025

The bioinformation metaverse proposed in this paper is founded on bioinformatics. It applies high-end information-processing technologies to collect, filter, visualize, calculate, merge, simulate, and optimize biological big data during analysis, in order to solve the high-dimensional, high-complexity problems of the bioinformation metaverse field. It can realize multi-scale traversal from atomic layers and molecules to proteins and complete biological systems. These capabilities are important for the construction and development of the bioinformation metaverse. Multi-source data fusion and analytics methods are important for efficiently interpreting high-dimensional, high-complexity biological systems from atomic layers to molecules. This paper further analyzes the application of the metaverse in six aspects (molecular manipulation, biological perception, protein structure study, decentralized technology, interactive simulation, and visualization), highlighting the significance of the bioinformation metaverse for bioinformatics research and development. A multi-layered, closely connected biological information system can be built to make life-science study and technology research more efficient.

The first Metaverse Scientist Forum was successfully convened virtually on March 7, 2025, from 14:30 to 16:00. This pioneering session featured distinguished presentations by Prof. Adrian David Cheok from Nanjing University of Information Science and Technology and Dr. Shashi Kant Gupta of Eudoxia Research University. Academic leadership was provided by Prof. Pan Zhigeng, Dean of the Artificial Intelligence School at Nanjing University of Information Science and Technology, who chaired the forum, while Dr. Wang Wenxiao, a doctoral supervisor from Macau University of Science and Technology, served as invited moderator.

Prof. Zhigeng Pan

Professor, Hangzhou International Innovation Institute (H3I), Beihang University, China

Prof. Jianrong Tan

Academician, Chinese Academy of Engineering, China

Conference Time

December 15-18, 2025

Conference Venue

Hong Kong Convention and Exhibition Center (HKCEC)

...

Metaverse Scientist Forum No.3 was successfully held on April 22, 2025, from 19:00 to 20:30 (Beijing Time)...

We received the Scopus notification on April 19th, confirming that the journal has been successfully indexed by Scopus...

We are pleased to announce that we have updated the requirements for manuscript figures in the submission guidelines. Manuscripts submitted after April 15, 2025 are required to strictly adhere to the updated requirements. These updates are aimed at ensuring the highest quality of visual content in our publications and enhancing the overall readability and impact of your research. For more details, please see submissions...

Open Access
