Asia Pacific Academy of Science Pte. Ltd. (APACSCI) specializes in international journal publishing. APACSCI adopts the open access publishing model and provides an important communication bridge for academic groups whose fields of interest include engineering, technology, medicine, computer science, mathematics, agriculture and forestry, and the environment.

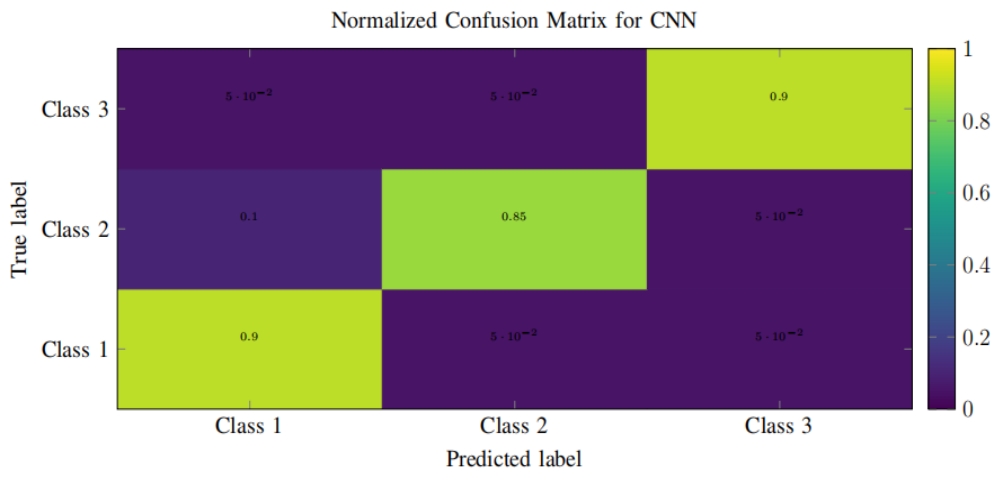

We are pleased to present a curated collection of groundbreaking articles authored by some of the World Top 2% Scientists in their respective fields. These articles delve into a diverse array of topics, including gaming, computer graphics, virtual reality, generative AI, cybersecurity, and machine learning. Each article provides unique insights and cutting-edge research that collectively enhance our understanding of the Metaverse and its potential applications. Below is a brief overview of the seven featured articles.

1. Survey on haptic technologies for virtual reality applications during COVID-19

Author of 2021-2023 Top 2% Scientist: Prof. Zhigeng Pan

Author of 2021-2023 Top 2% Scientist: Prof. Adrian David Cheok

Abstract: This paper presents a comprehensive survey on the advancements and applications of haptic technologies, which are methods that facilitate the sense of touch and movement, in virtual reality (VR) during the COVID-19 pandemic. It aims to identify and classify the various domains in which haptic technologies have been utilized or can be adapted to combat the unique challenges posed by the pandemic or public health emergencies in general. Existing reviews and surveys that concentrate on the applications of haptic technologies during the COVID-19 pandemic are often limited to specific domains; this survey strives to identify and consolidate all application domains discussed in the literature, including healthcare, medical training, education, social communication, and fashion and retail. Original research and review articles were collected from the Web of Science Core Collection as the main source, using a combination of keywords (like ‘haptic’, ‘haptics’, ‘touch interface’, ‘tactile’, ‘virtual reality’, ‘augmented reality’, ‘Covid-19’, and ‘pandemic’) and Boolean operators to refine the search and yield relevant results. The paper reviews various haptic devices and systems and discusses the technological advancements that have been made to offer more realistic and immersive VR experiences. It also addresses challenges in haptic technology in VR, including fidelity, ethical and privacy considerations, and cost and accessibility issues.

2. The role of generative AI in cyber security

Author of 2021-2023 Top 2% Scientist: Prof. Kevin Curran

Abstract: In the ever-evolving landscape of cyber threats, the integration of Artificial Intelligence (AI) into safeguarding digital assets and sensitive information has become popular for organisations throughout the world. This evolution of technology has given rise to a proliferation of cyber threats, necessitating robust cybersecurity measures. Traditional approaches to cybersecurity often struggle to keep pace with these rapidly evolving threats. To address this challenge, Generative Artificial Intelligence (Generative AI) has emerged as a transformative sentinel. Generative AI leverages advanced machine learning techniques to autonomously generate data, text, and solutions, and it holds the potential to revolutionize cybersecurity by enhancing threat detection, incident response, and security decision-making processes. We explore here the pivotal role that Generative AI plays in the realm of cybersecurity, delving into its core concepts, applications, and its potential to shape the future of digital security.

3. Deciphering avian emotions: A novel AI and machine learning approach to understanding chicken vocalizations

Author of 2021-2023 Top 2% Scientist: Prof. Adrian David Cheok

Abstract: In this groundbreaking study, we present a novel approach to interspecies communication, focusing on the understanding of chicken vocalizations. Leveraging advanced mathematical models in artificial intelligence (AI) and machine learning, we have developed a system capable of interpreting various emotional states in chickens, including hunger, fear, anger, contentment, excitement, and distress. Our methodology employs a cutting-edge AI technique we call Deep Emotional Analysis Learning (DEAL), a highly mathematical and innovative approach that allows for the nuanced understanding of emotional states through auditory data. DEAL is rooted in complex mathematical algorithms, enabling the system to learn and adapt to new vocal patterns over time. We conducted our study with a sample of 80 chickens, meticulously recording and analyzing their vocalizations under various conditions. To ensure the accuracy of our system’s interpretations, we collaborated with a team of eight animal psychologists and veterinary surgeons, who provided expert insights into the emotional states of the chickens. Our system demonstrated an impressive accuracy rate of close to 80%, marking a significant advancement in the field of animal communication. This research not only opens up new avenues for understanding and improving animal welfare but also sets a precedent for further studies in AI-driven interspecies communication. The novelty of our approach lies in its application of sophisticated AI techniques to a largely unexplored area of study. By bridging the gap between human and animal communication, we believe our research will pave the way for more empathetic and effective interactions with the animal kingdom.

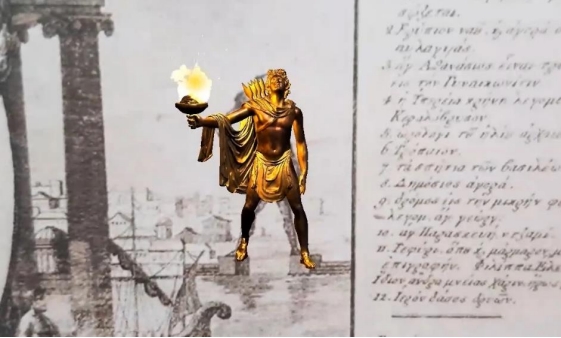

4. Computer vision meets metaverse

Author of 2022-2023 Top 2% Scientist: Prof. George A. Papakostas

Abstract: This comprehensive analysis delves into the historical progression and important technological and contemporary advancements of computer vision inside the metaverse. The metaverse, which can be characterized as an interactive virtual reality environment that mirrors the physical world, signifies a novel domain for the utilization of computer vision in various applications. These applications span from object identification and tracking to gesture recognition and augmented reality. Additionally, a thorough evaluation of specific case studies is carried out to provide a deeper understanding of the subject. Despite notable progress, the incorporation and utilization of computer vision inside the metaverse present numerous obstacles, including computational expenses, apprehensions regarding data privacy, and the faithful replication of physical aspects of reality. Potential solutions are examined, including deep learning approaches, optimization strategies, and the formation of ethical guidelines. A comprehensive analysis of anticipated patterns within the industry is also included, with emphasis on the confluence of artificial intelligence, the Internet of Things, and blockchain technologies. These convergences are predicted to create substantial prospects for the advancement of the metaverse. This review culminates by offering a contemplation on the ethical considerations and duties that arise from the utilization of computer vision in the realm beyond mortal existence. Research findings demonstrate that these technologies have greatly augmented user engagement and immersion within the digital domain. Readers can enhance their understanding of the interdependent connection between computer vision and the metaverse through the present analysis of existing scholarly works. Thus, this study aspires to make a valuable contribution to the advancement of research in this new domain.

5. From Three-Body game to Metaverse

Author of 2021-2023 Top 2% Scientist: Assoc. Prof. Zhihan Lv

Abstract: This paper aims to study the concept of the metaverse as reflected in the Three-Body game in the TV series The Three-Body Problem, as well as the current development status of the metaverse in the real world. Firstly, the Three-Body game in The Three-Body Problem is analyzed and explained to uncover the underlying metaverse knowledge. Then, through a literature review, the implementation and application scenarios of the metaverse in various industries are investigated from the databases Web of Science and Scopus, using keywords such as “metaverse”, “Three-Body”, “virtual world”, “virtual reality games”, and “human-computer interaction”. Nearly 10,000 relevant articles were retrieved, and 20 articles were selected for in-depth qualitative and quantitative analysis. Subsequently, the content of the literature is summarized from three aspects: the current development status of the metaverse, advancements in virtual reality technology, and advancements in human-computer interaction technology. The application status and technological progress of the metaverse in various industries and the existing technological limitations are discussed. By extending the concept of the metaverse from science fiction, this paper provides research ideas for the future development of the metaverse.

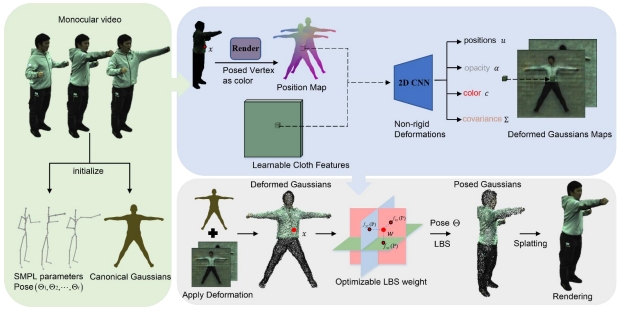

6. From motion to magic: Real-time virtual-real stage effects via 3D motion capture

Author of 2023 Top 2% Scientist: Prof. Xun Wang

Abstract: Immersive cultural performances with virtual-real fusion effects are the future development trend in the exhibition and stage industry. However, current virtual-real stage performances heavily rely on traditional sequential design and arrangements. During the performance, actors must move to specific positions based on the musical beat and execute predetermined actions with a pre-designed amplitude and frequency to synchronize with the fixedly played stage visual effects; otherwise, major performance accidents such as plot inconsistencies or continuity errors may occur. To address the problem, this paper introduces a real-time generation system for stage visual effects based on multi-view multi-person 3D motion capture. The system utilizes multi-view 3D motion capture technique to achieve non-intrusive real-time interaction perception of target actors in the stage space. By perceiving the spatial position and performance actions of the target actors, corresponding stage visual effects are generated in real-time. This is followed by the seamless integration of sound effects and immersive high-definition display, ultimately realizing multidimensional real-time interaction between real actors and virtual visual effects in the stage space. We conducted an experimental virtual-real stage performance, lasting approximately two minutes, in a physical theater to validate the effectiveness of our proposed method. The experiment not only produced a unique innovative effect of blending stage and technology but also effectively enhanced the sense of presence and interactivity of the stage performance, providing actors with more freedom and control in their performances.

7. Text to video generation via knowledge distillation

Author of 2023 Top 2% Scientist: Prof. Chunxia Xiao

Abstract: Text-to-video generation (T2V) has recently attracted more attention due to the wide application scenarios of video media. However, compared with the substantial advances in text-to-image generation (T2I), the research on T2V remains in its early stage. The difficulty mainly lies in maintaining the text-visual semantic consistency and the video temporal coherence. In this paper, we propose a novel distillation and translation GAN (DTGAN) to address these problems. First, we leverage knowledge distillation to guarantee semantic consistency. We distill text-visual mappings from a well-performing T2I teacher model and transfer it to our DTGAN. This knowledge serves as shared abstract features and high-level constraints for each frame in the generated videos. Second, we propose a novel visual recurrent unit (VRU) to achieve video temporal coherence. The VRU can generate frame sequences as well as process the temporal information across frames. It enables our generator to act as a multi-modal variant of the language model in neural machine translation task, which iteratively predicts the next frame based on the input text and the previously generated frames. We conduct experiments on two synthetic datasets (SBMG and TBMG) and one real-world dataset (MSVD). Qualitative and quantitative comparisons with state-of-the-art methods demonstrate that our DTGAN can generate results with better text-visual semantic consistency and temporal coherence.


Prof. Zhigeng Pan

Professor, Hangzhou International Innovation Institute (H3I), Beihang University, China

Prof. Jianrong Tan

Academician, Chinese Academy of Engineering, China

Conference Time

December 15-18, 2025

Conference Venue

Hong Kong Convention and Exhibition Center (HKCEC)

...

Metaverse Scientist Forum No.3 was successfully held on April 22, 2025, from 19:00 to 20:30 (Beijing Time)...

We received the Scopus notification on April 19th, confirming that the journal has been successfully indexed by Scopus...

We are pleased to announce that we have updated the requirements for manuscript figures in the submission guidelines. Manuscripts submitted after April 15, 2025 are required to strictly adhere to the updated requirements. These updates are aimed at ensuring the highest quality of visual content in our publications and enhancing the overall readability and impact of your research. For more details, please see the submissions...
