From motion to magic: Real-time virtual-real stage effects via 3D motion capture

Vol 4, Issue 2, 2023

Abstract

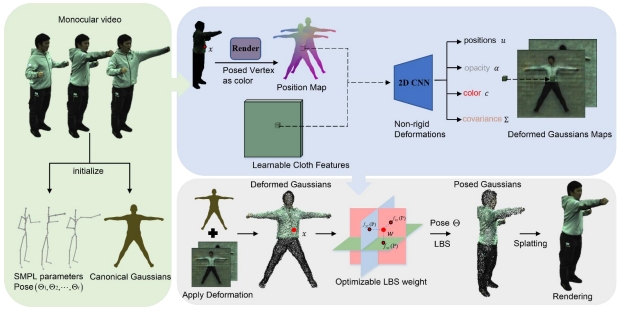

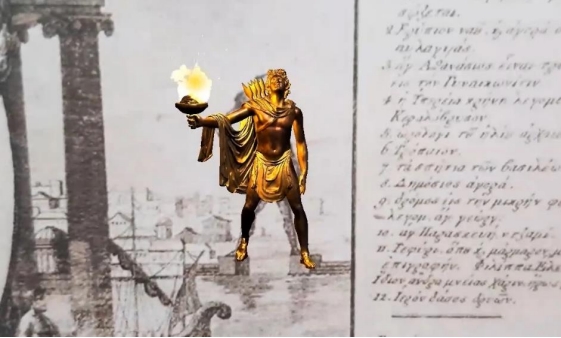

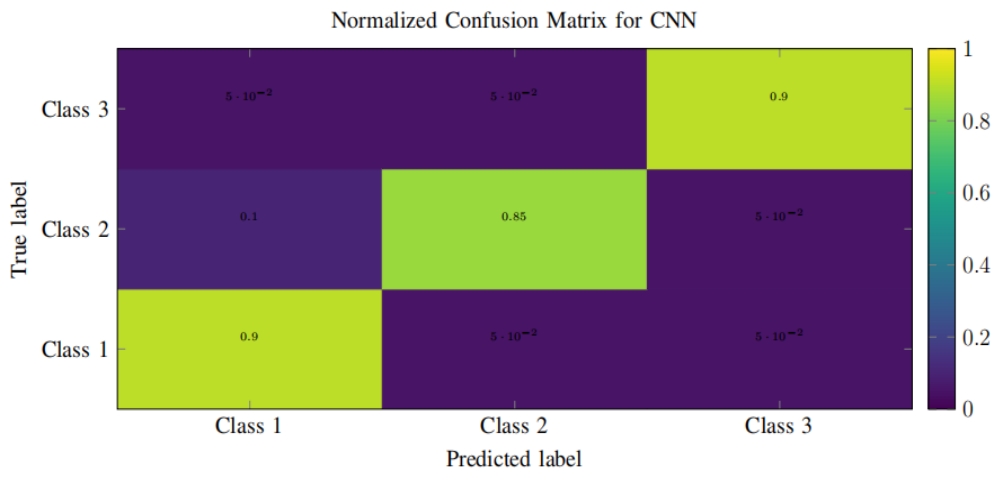

Immersive cultural performances that fuse virtual and real elements are the future direction of the exhibition and stage industry. However, current virtual-real stage performances rely heavily on traditional sequential design and arrangement. During a performance, actors must move to specific positions on the musical beat and execute predetermined actions with a pre-designed amplitude and frequency to stay synchronized with stage visual effects that play back on a fixed timeline; otherwise, serious performance errors such as plot inconsistencies or continuity breaks may occur. To address this problem, this paper introduces a real-time generation system for stage visual effects based on multi-view, multi-person 3D motion capture. The system uses multi-view 3D motion capture to perceive the target actors in the stage space non-intrusively and in real time. From the perceived spatial positions and performance actions of the target actors, it generates the corresponding stage visual effects in real time. These effects are then integrated with sound effects and an immersive high-definition display, realizing multidimensional real-time interaction between real actors and virtual visual effects in the stage space. To validate the proposed method, we conducted an experimental virtual-real stage performance, lasting approximately two minutes, in a physical theater. The experiment produced a distinctive blend of stage and technology, enhanced the sense of presence and interactivity of the performance, and gave actors more freedom and control in their performances.
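The pipeline described in the abstract (multi-view capture, then position and action perception, then effect generation) can be illustrated with a minimal sketch. The abstract does not specify the paper's actual algorithms, so everything here is an assumption made for illustration: the two-ray midpoint triangulation stands in for the multi-view 3D reconstruction, and the names `triangulate_midpoint`, `select_effect`, the zone layout, and the cue table are all hypothetical.

```python
# Illustrative sketch only; not the authors' implementation.
# Assumption: each camera contributes a ray (origin, direction) toward a joint.

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _along(origin, direction, t):
    return tuple(o + t * d for o, d in zip(origin, direction))

def triangulate_midpoint(o1, d1, o2, d2):
    """Estimate a 3D point as the midpoint of the shortest segment
    between two camera rays o1 + t1*d1 and o2 + t2*d2."""
    w = _sub(o1, o2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    t1 = (b * e - c * d) / denom
    t2 = (a * e - b * d) / denom
    p1 = _along(o1, d1, t1)
    p2 = _along(o2, d2, t2)
    return tuple((u + v) / 2 for u, v in zip(p1, p2))

def select_effect(position, action, zones, cues):
    """Map an actor's floor position (x, y) and a recognized action label
    to a visual-effect name, or None when no cue matches.
    zones: {zone_name: (x_min, x_max, y_min, y_max)}   (hypothetical layout)
    cues:  {(zone_name, action_label): effect_name}    (hypothetical table)"""
    x, y = position[0], position[1]
    for name, (x_min, x_max, y_min, y_max) in zones.items():
        if x_min <= x <= x_max and y_min <= y <= y_max:
            return cues.get((name, action))
    return None

# Two hypothetical cameras whose rays both pass through the point (1, 2, 3).
joint = triangulate_midpoint((0, 0, 0), (1, 2, 3), (4, 0, 0), (-3, 2, 3))

zones = {"center": (0.0, 2.0, 0.0, 4.0)}
cues = {("center", "raise_arms"): "particle_burst"}
effect = select_effect(joint, "raise_arms", zones, cues)
```

In this sketch the effect is chosen from the actor's current position and action rather than from a fixed timeline, which is the kind of decoupling the abstract describes. A real system would fuse more than two views, track several actors at once, and obtain the action label from a recognition model.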

Supporting Agencies

Zhejiang Provincial Natural Science Foundation of China under Grant No. LY21F020010; Key R&D Program of China (2018YFB1404102)

Copyright (c) 2023 Xiongbin Lin, Xun Wang, Wenwu Yang

This work is licensed under a Creative Commons Attribution 4.0 International License.
