Text to video generation via knowledge distillation

Vol 5, Issue 1, 2024

Abstract

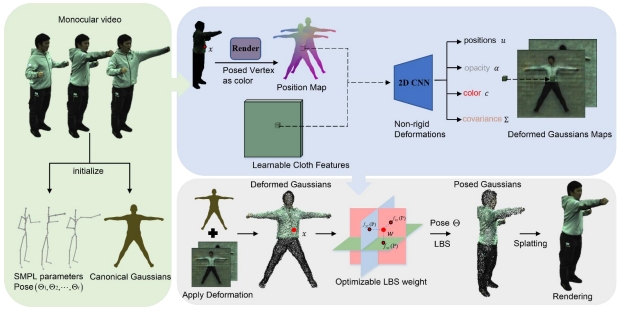

Text-to-video (T2V) generation has recently attracted growing attention due to the wide application scenarios of video media. However, compared with the substantial advances in text-to-image (T2I) generation, research on T2V remains at an early stage. The difficulty lies mainly in maintaining text-visual semantic consistency and video temporal coherence. In this paper, we propose a novel distillation and translation GAN (DTGAN) to address these problems. First, we leverage knowledge distillation to guarantee semantic consistency. We distill text-visual mappings from a well-performing T2I teacher model and transfer them to our DTGAN. This knowledge serves as shared abstract features and high-level constraints for each frame in the generated videos. Second, we propose a novel visual recurrent unit (VRU) to achieve video temporal coherence. The VRU can generate frame sequences as well as process the temporal information across frames. It enables our generator to act as a multi-modal variant of the language model used in neural machine translation, iteratively predicting the next frame from the input text and the previously generated frames. We conduct experiments on two synthetic datasets (SBMG and TBMG) and one real-world dataset (MSVD). Qualitative and quantitative comparisons with state-of-the-art methods demonstrate that our DTGAN generates results with better text-visual semantic consistency and temporal coherence.
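The two ideas in the abstract, next-frame prediction conditioned on the text and on earlier frames, and a teacher feature shared as a constraint across all frames, can be illustrated with a toy sketch. This is not the DTGAN implementation: the abstract does not specify the VRU's internals or the form of the distillation loss, so `toy_step`, `generate_video`, `distillation_loss`, the mixing weights, and the use of mean squared error are all hypothetical stand-ins chosen for illustration.

```python
import math


def toy_step(text_emb, hidden, prev_frame):
    """Hypothetical stand-in for one recurrent step (NOT the paper's VRU).

    Mixes the text embedding, the recurrent state, and the previous frame
    with arbitrary fixed weights, then emits the next frame.
    """
    new_hidden = [
        math.tanh(0.5 * h + 0.3 * t + 0.2 * f)
        for h, t, f in zip(hidden, text_emb, prev_frame)
    ]
    frame = [math.tanh(h) for h in new_hidden]
    return new_hidden, frame


def generate_video(text_emb, num_frames):
    """Predict frames one at a time from the text and the frames so far."""
    hidden = [0.0] * len(text_emb)  # recurrent state summarising earlier frames
    frame = [0.0] * len(text_emb)   # blank start frame (an assumption)
    frames = []
    for _ in range(num_frames):
        hidden, frame = toy_step(text_emb, hidden, frame)
        frames.append(frame)
    return frames


def distillation_loss(teacher_feat, frames):
    """Average squared distance between one teacher feature and every frame.

    Assumes, for illustration only, that frames and the teacher feature
    live in the same space and that MSE is the matching criterion.
    """
    total = 0.0
    for frame in frames:
        total += sum((f - t) ** 2 for f, t in zip(frame, teacher_feat)) / len(teacher_feat)
    return total / len(frames)


text_emb = [0.1, -0.2, 0.3]       # made-up text embedding
teacher_feat = [0.2, -0.1, 0.25]  # made-up T2I teacher feature
video = generate_video(text_emb, num_frames=4)
loss = distillation_loss(teacher_feat, video)
```

In this sketch the same `teacher_feat` is compared against every frame, which mirrors the abstract's description of distilled knowledge acting as a shared constraint, while the loop in `generate_video` mirrors the translation-style iterative prediction of the next frame.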

Copyright (c) 2024 Huasong Han, Ziqing Li, Fei Fang, Fei Luo, Chunxia Xiao

License URL: https://creativecommons.org/licenses/by/4.0/
