Metafusion: hybrid ML-based object recognition and GPU rendering for real-time 3D metaverse visualization

Vol 6, Issue 3, 2025

Abstract

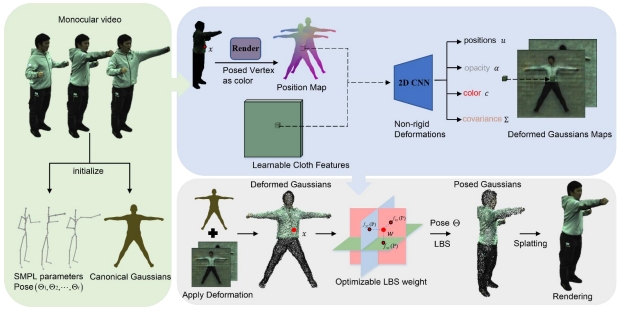

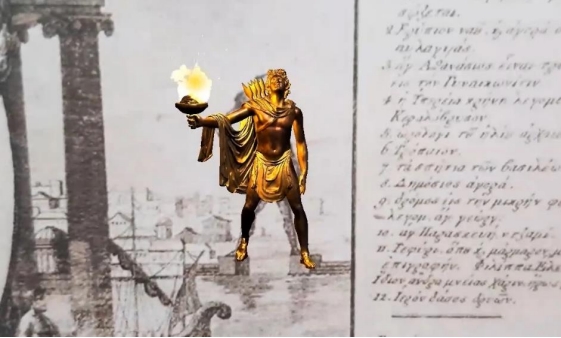

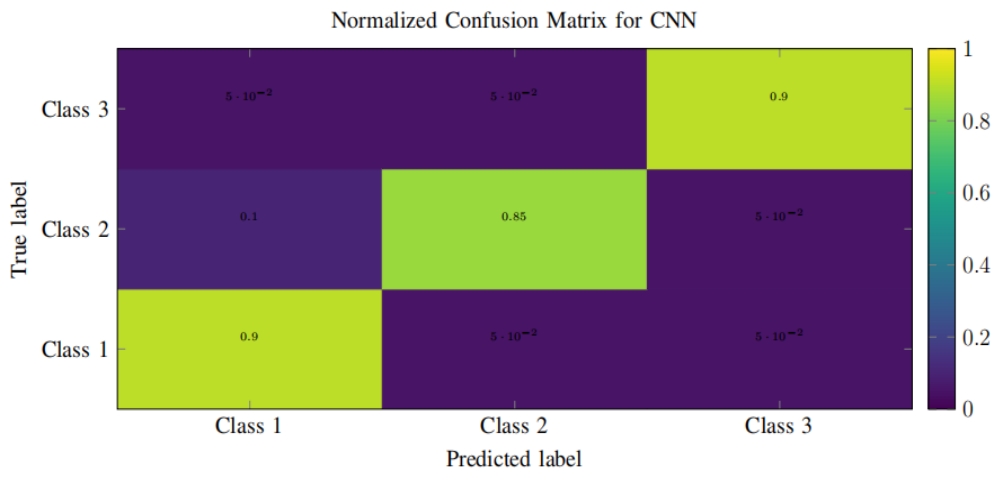

The metaverse, a shared virtual space, promises engaging 3D experiences through augmented reality (AR) and virtual reality (VR). Despite notable progress, visualizing intricate data and environments in real time remains an open problem. This article proposes a hybrid method that improves real-time 3D visualization in the metaverse by integrating machine learning (ML)-based object recognition with GPU-based rendering. By combining real-time data processing, multi-layered virtual environments, and advanced rendering methods, the proposed system aims to improve interaction, immersion, and scalability. The system uses the recognized importance of each object to dynamically adjust that object's level of detail (LOD), balancing rendering quality against computational cost. Its main components are an object recognition module that classifies and ranks objects in real time and a GPU rendering pipeline that scales rendering detail according to object priority. The algorithm seeks a trade-off between high visual quality and system performance, using deep learning for precise object detection and GPU parallelism for efficient rendering. Experimental results indicate that the system achieves considerable improvements in rendering speed, interaction latency, and visual quality compared with common AR/VR rendering methods. The results support the prospect of fusing AI and graphics to build more efficient and visually sophisticated virtual environments.
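The importance-driven LOD selection described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the `DetectedObject` type, the `select_lod` function, the 0.7/0.3 weighting, and the 50 m distance cutoff are all assumptions chosen for illustration, and the importance score is taken to be a value in [0, 1] supplied by some recognition module.

```python
from dataclasses import dataclass


@dataclass
class DetectedObject:
    """Hypothetical output of an object recognition module."""
    name: str
    importance: float  # assumed score in [0, 1]; higher means more important
    distance: float    # assumed distance from the camera, in meters


def select_lod(obj: DetectedObject, num_levels: int = 4,
               max_distance: float = 50.0) -> int:
    """Map an object's priority to an LOD index (0 = highest detail).

    The 0.7 / 0.3 weights and the linear distance falloff are
    illustrative assumptions, not values taken from the article.
    """
    # Objects at or beyond max_distance contribute no proximity bonus.
    proximity = max(0.0, 1.0 - obj.distance / max_distance)
    priority = 0.7 * obj.importance + 0.3 * proximity
    priority = min(1.0, max(0.0, priority))
    # High priority maps to a low LOD index (more detail).
    return min(num_levels - 1, int((1.0 - priority) * num_levels))


def assign_lods(objects: list[DetectedObject]) -> dict[str, int]:
    """Choose an LOD index for every object in the scene."""
    return {obj.name: select_lod(obj) for obj in objects}


scene = [
    DetectedObject("avatar", importance=0.9, distance=10.0),
    DetectedObject("signpost", importance=0.5, distance=30.0),
    DetectedObject("far_tree", importance=0.2, distance=40.0),
]
lods = assign_lods(scene)
```

In this sketch a nearby, important object such as the avatar receives the most detailed level, while a distant, low-importance object falls to the coarsest one. A real pipeline would pass the chosen index to the GPU renderer, and would likely add hysteresis so that objects do not flicker between levels from frame to frame.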

Copyright (c) 2025 Author(s)

This work is licensed under a Creative Commons Attribution 4.0 International License.
