Asia Pacific Academy of Science Pte. Ltd. (APACSCI) specializes in international journal publishing. APACSCI adopts the open access publishing model and provides an important communication bridge for academic groups whose interest fields include engineering, technology, medicine, computer, mathematics, agriculture and forestry, and environment.

Efficient transfer attacks via enhancing perturbation robustness

Vol 5, Issue 2, 2024


Abstract

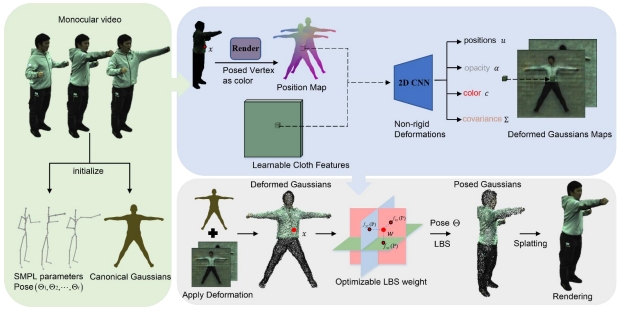

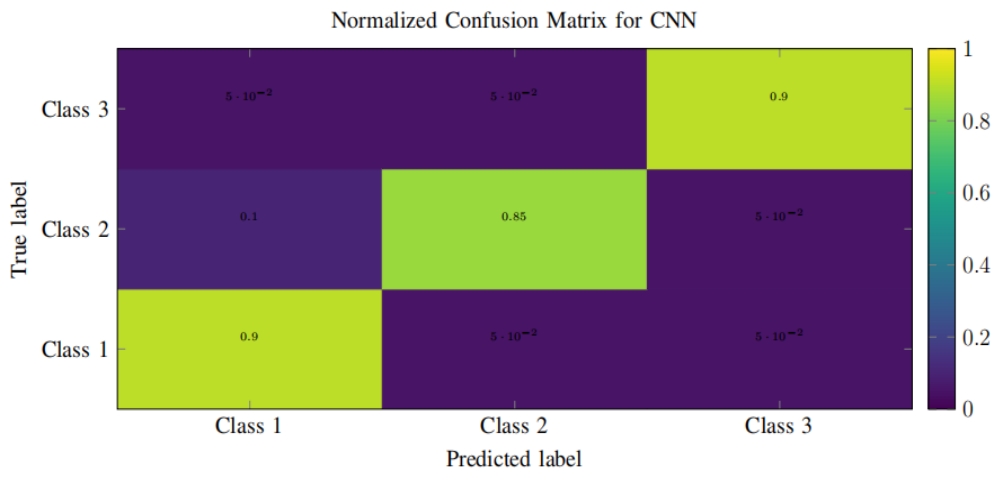

With the rapid development of deep learning, artificial intelligence (AI) is now widely applied in domains such as image classification, text processing, and autonomous driving. The accompanying security risks, however, cannot be ignored. Studies have shown that deep neural networks are vulnerable to adversarial example attacks, which add imperceptible perturbations to an input in order to change the model’s classification result, exposing weaknesses in deployed deep learning applications. Transfer attacks are practical in real-world scenarios, but their current performance in black-box settings is limited. In this study, we propose a method that combines an attention mechanism with a frequency-domain transformation to make adversarial perturbations more robust, thereby improving transfer attack performance against deep learning models in black-box scenarios. Specifically, we introduce CBAM-ResNet50, an attention-enhanced model, into the transfer attack to improve the model’s ability to identify important image regions; adding perturbations to these attention-concentrated regions improves the robustness of the adversarial perturbations. Furthermore, we introduce a random image augmentation transformation in the frequency domain, which increases the diversity and robustness of the adversarial perturbations by distributing them across edges and textures. Experimental results show that, taking both human perceptibility and computational cost into account, the proposed method achieves a maximum black-box transfer attack success rate of 60.05%, compared with 49.65% for the NI-FGSM method across three models. Across the five methods, the average black-box attack success rate improves by more than 6 percentage points.
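To make the pipeline described in the abstract more concrete, the following is a minimal sketch of how an NI-FGSM update might be combined with a random frequency-domain transformation. It is not the authors' implementation. The toy linear softmax classifier `W`, the helper names `freq_scale`, `input_grad`, and `ni_fgsm_freq`, the per-frequency jitter range `rho`, and all default parameter values are assumptions introduced only for illustration. The attention component (CBAM-ResNet50) is not sketched because it requires a trained network.

```python
import numpy as np

def freq_scale(x, scale):
    """Rescale each 2-D Fourier coefficient of x by a real factor and return the real part.

    This stands in for the paper's frequency-domain augmentation (an assumption).
    For a real `scale` this linear map is symmetric, so the same call also
    back-propagates a gradient through the transform.
    """
    return np.fft.ifft2(np.fft.fft2(x) * scale).real

def input_grad(W, x, y):
    """Gradient of the cross-entropy loss w.r.t. the input for a toy linear softmax model."""
    logits = W @ x.ravel()
    p = np.exp(logits - logits.max())
    p /= p.sum()
    p[y] -= 1.0                      # softmax probabilities minus one-hot label
    return (W.T @ p).reshape(x.shape)

def ni_fgsm_freq(W, x, y, eps=0.1, steps=10, mu=1.0, rho=0.2, n_copies=4, seed=0):
    """NI-FGSM with gradients averaged over randomly frequency-jittered copies."""
    rng = np.random.default_rng(seed)
    alpha = eps / steps              # per-step size
    g = np.zeros_like(x)             # accumulated momentum
    x_adv = x.copy()
    for _ in range(steps):
        x_nes = x_adv + alpha * mu * g           # Nesterov look-ahead point
        grad = np.zeros_like(x)
        for _ in range(n_copies):
            scale = rng.uniform(1.0 - rho, 1.0 + rho, size=x.shape)
            g_t = input_grad(W, freq_scale(x_nes, scale), y)
            grad += freq_scale(g_t, scale)       # chain rule through the transform
        grad /= n_copies
        g = mu * g + grad / (np.abs(grad).sum() + 1e-12)   # L1-normalised momentum
        x_adv = np.clip(x_adv + alpha * np.sign(g), x - eps, x + eps)  # L-inf budget
        x_adv = np.clip(x_adv, 0.0, 1.0)         # keep a valid image
    return x_adv
```

In a real transfer attack, `input_grad` would be replaced by back-propagation through a surrogate network, and the resulting `x_adv` would then be evaluated on separate black-box models. Averaging the gradient over several transformed copies is one common way to reduce overfitting to the surrogate; the number of copies and the jitter strength used here are placeholders rather than values from the paper.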

Copyright (c) 2024 Chengzhi Zhong, Jipeng Hu, Mengda Xie, Meie Fang

License URL: https://creativecommons.org/licenses/by/4.0/

This site is licensed under a Creative Commons Attribution 4.0 International License (CC BY 4.0).
