Research on object placement method based on trajectory recognition in Metaverse

Vol 2, Issue 2, 2021

Abstract

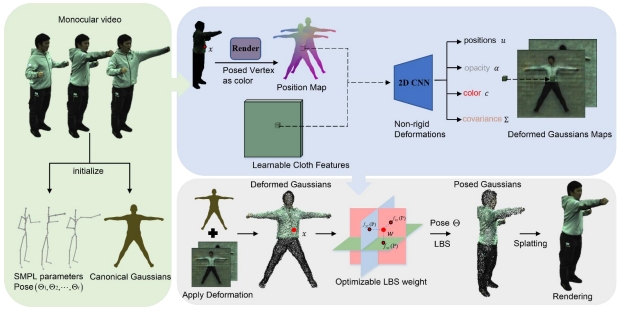

Many studies of object placement in virtual reality environments focus on only one aspect, such as efficiency, accuracy, or interactivity. However, balancing these aspects and accounting for multiple indicators is key to improving the user experience. This paper therefore proposes an efficient and interactive object placement method that recognizes the controller trajectory in a virtual reality environment. To provide user-friendly feedback, we link the controller's motion information to a ray and visualize the intersection of the ray with the scene. The trajectory is abstracted as a point cloud for matching, and the corresponding object is instantiated at the center of the trajectory. To evaluate the interactive performance of the method and user satisfaction with it, we conduct a user experience study. The results show that the method improves both efficiency and user interest in the interaction, offering a useful reference for the interaction design of virtual reality layout applications.
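
The matching and placement steps described in the abstract can be illustrated with a minimal sketch. The abstract does not specify the matching algorithm, so this assumes a simplified greedy nearest-neighbor comparison between normalized point clouds. All function names (`resample`, `normalize`, `cloud_distance`, `recognize`), the template dictionary, and the choice of 32 resampled points are hypothetical and are not taken from the paper.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def resample(points: List[Point], n: int = 32) -> List[Point]:
    """Resample a trajectory to n points spaced evenly along its path."""
    total = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    if total == 0:
        return [points[0]] * n
    step = total / (n - 1)
    pts = list(points)
    out = [pts[0]]
    acc = 0.0
    i = 1
    while i < len(pts):
        d = math.dist(pts[i - 1], pts[i])
        if d > 0 and acc + d >= step:
            # Interpolate a new point exactly one step along the path.
            t = (step - acc) / d
            q = tuple(a + t * (b - a) for a, b in zip(pts[i - 1], pts[i]))
            out.append(q)
            pts.insert(i, q)  # q becomes the start of the next segment
            acc = 0.0
        else:
            acc += d
        i += 1
    while len(out) < n:  # pad if rounding left the result one point short
        out.append(pts[-1])
    return out[:n]


def centroid(points: List[Point]) -> Point:
    """Mean position of the points (used here as the trajectory center)."""
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(3))


def normalize(points: List[Point]) -> List[Point]:
    """Translate the cloud to the origin and scale it to unit extent."""
    c = centroid(points)
    shifted = [tuple(p[k] - c[k] for k in range(3)) for p in points]
    scale = max(max(abs(v) for v in p) for p in shifted) or 1.0
    return [tuple(v / scale for v in p) for p in shifted]


def cloud_distance(a: List[Point], b: List[Point]) -> float:
    """Greedy matching: pair each point in a with its nearest unused point in b."""
    unmatched = list(b)
    total = 0.0
    for p in a:
        j = min(range(len(unmatched)), key=lambda k: math.dist(p, unmatched[k]))
        total += math.dist(p, unmatched.pop(j))
    return total


def recognize(trajectory: List[Point],
              templates: Dict[str, List[Point]],
              n: int = 32) -> Tuple[str, Point]:
    """Return the best-matching template name and the placement position."""
    sampled = resample(trajectory, n)
    cloud = normalize(sampled)
    best = min(
        templates,
        key=lambda name: cloud_distance(cloud, normalize(resample(templates[name], n))),
    )
    # Assumption: "center of the trajectory" is read as the centroid of the samples.
    return best, centroid(sampled)
```

In this sketch, a caller would pass the recorded ray-scene intersection points as `trajectory` and a dictionary mapping object names to template strokes as `templates`, then instantiate the returned object at the returned position. Normalizing before matching makes the comparison independent of where and how large the user draws the shape, while the placement position is computed from the unnormalized samples.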

Copyright (c) 2021 Ke Lang, Xiaoying Nie, Yongjian Huai, Yuanyuan Chen

This work is licensed under a Creative Commons Attribution 4.0 International License.
